Last Monday we conducted two experiments. The first was used to compare how oil and water heat up, using the equation ΔE = c*m*ΔT, which relates the energy a substance absorbs to its specific heat capacity, or how much energy is needed to raise the temperature of one gram of the substance by one degree Celsius. The terms are as follows:

ΔE: Amount of energy absorbed (J)

m: mass of a liquid (g)

c: specific heat capacity (J/g-K)

ΔT : change in temperature (K)

ρ: mass density (g/ml)

Before the experiment we found the mass density of oil (0.92 g/ml) and water (1.0 g/ml) as well as the specific heat of oil (2.0 J/g-K) and water (4.184 J/g-K).

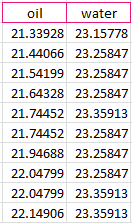

For the actual experiment we added 80 ml of oil and 80 ml of water to separate beakers and placed them on a hot plate, at which point we put in a temperature probe connected to the computer to record the data. Because the hot plate was not heating as much as it should have, the liquids did not change temperature as much as was needed, and according to the data, our water had a higher temperature than the cooking oil. Given the properties of oil and water, and that oil heats up faster, we can only conclude that this was an error.

After the data was recorded, we calculated the temperature difference between the start and the end of the experiment, and obtained 0.80978 degrees Celsius for the oil and 0.201135 degrees Celsius for the water.

We then calculated the energy absorbed by each liquid, which required the mass density and specific heat along with the temperature change, and obtained 67.39621 J for water and 119.1996 J for oil. The percent difference is 55.52473%, which would suggest that the oil took in more energy than the water. This makes little sense, considering that oil has a lower specific heat than water and therefore needs less energy to be heated one degree, once again pointing to faulty data.
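The calculation described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the mass is taken as mass density times the 80 ml volume, the function names are our own, and the percent difference is assumed to be the symmetric form (difference divided by the mean of the two values), which is consistent with the 55.52473% reported.

```python
def absorbed_energy(volume_ml, density_g_per_ml, specific_heat_j_per_g_k, delta_t_k):
    """Energy absorbed in joules: delta_E = c * m * delta_T, with m = density * volume."""
    mass_g = density_g_per_ml * volume_ml
    return specific_heat_j_per_g_k * mass_g * delta_t_k


def percent_difference(a, b):
    """Assumed form: |a - b| divided by the mean of a and b, as a percentage."""
    return abs(a - b) / ((a + b) / 2) * 100


# Inputs are the values quoted in the text (80 ml samples).
oil_j = absorbed_energy(80, 0.92, 2.0, 0.80978)
water_j = absorbed_energy(80, 1.0, 4.184, 0.201135)

print(f"oil: {oil_j:.4f} J")
print(f"water: {water_j:.4f} J")

# Percent difference between the two energies as reported in the text.
print(f"percent difference: {percent_difference(119.1996, 67.39621):.2f}%")
```

With these inputs the oil figure matches the reported 119.1996 J, while the water figure comes out slightly lower than the reported 67.39621 J, possibly because a slightly different specific heat for water was used in the original calculation.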

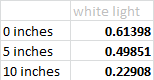

The second experiment focused on solar energy and which colors of light produce the most energy. For this we used a solar panel with a sensor, a flashlight, and colored pieces of film that we held over the light. We initially experimented with the height of the flashlight to see how the distance between the sensor and the light affected the amount of energy produced. Predictably, we found that the farther the light was from the sensor, the less voltage was produced.

We used six colors in total: teal, orange, pink, indigo, green, and white (no filter), each held at a height of zero inches from the sensor. By averaging the voltage produced, we determined that orange gave the highest average voltage, while unfiltered light gave the lowest.
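The averaging step could look something like the sketch below. The function name and the data layout (a dictionary from filter color to a list of voltage readings) are assumptions made for illustration, and the numbers are placeholders, not our measurements.

```python
from statistics import mean


def average_voltage_by_color(readings):
    """Map each filter color to the mean of its voltage readings."""
    return {color: mean(values) for color, values in readings.items()}


# Placeholder readings for illustration only; these are NOT the measured values.
example_readings = {
    "filter_a": [1.0, 3.0],
    "filter_b": [2.0, 4.0, 6.0],
}

averages = average_voltage_by_color(example_readings)
highest = max(averages, key=averages.get)  # color with the largest mean voltage
lowest = min(averages, key=averages.get)   # color with the smallest mean voltage
print(averages, highest, lowest)
```

Averaging several readings per color, rather than comparing single readings, helps smooth out small fluctuations in the sensor output.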