By Dario Hernandez

Picture this: Richard Nixon delivers a presidential address announcing to the world that the Apollo 11 moon landing did not succeed but instead ended in tragedy. One would simply roll their eyes, because such an address was never given and to entertain the notion would be ludicrous. Yet such a video exists. Had this alternate address been released in 1969, it would have caused great confusion, as many were eagerly waiting to see Neil Armstrong’s famous “giant leap for mankind.” The possibility of spreading fake videos that distort the truth is rapidly becoming far more tangible. While many already see the spread of false information as a threat, there is a larger danger looming.

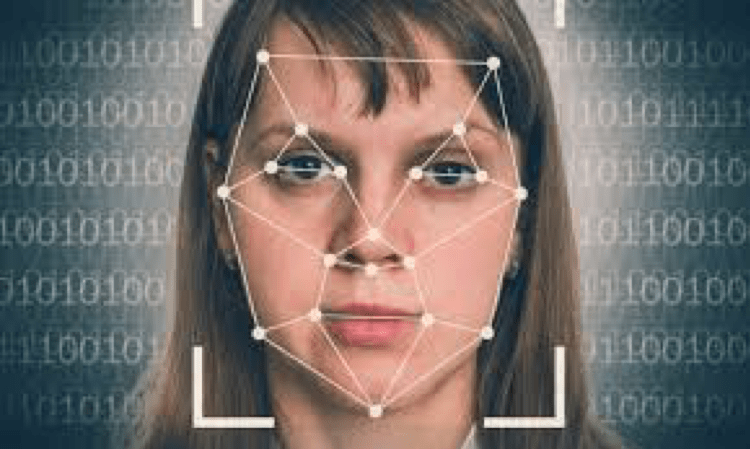

It is now entirely possible to make a video in which a person says or does something that never happened. This advance in video editing and media production was originally intended to usher in a new era of cinematography. The return of Princess Leia in the Star Wars film Rogue One was seen as an incredible use of technology that brought back a beloved character. Robert De Niro and Al Pacino will be portrayed as younger versions of themselves in Martin Scorsese’s The Irishman. Computer-generated imagery, or CGI, lets filmmakers digitally edit footage to enhance a moviegoer’s experience. It opens a whole new realm of filmmaking that can change the way we view movies.

But with such a great advance in image editing comes its lesser-known evil twin. The Pentagon has begun to take a more serious look at fake videos, known as deepfakes, that can be used to spread misinformation and manipulate a large audience into believing something entirely false. The House Intelligence Committee held an open hearing on deepfakes and artificial intelligence. There is a sense that Congress and the Pentagon have been caught off guard: Representative Adam B. Schiff, the Chairman of the Intelligence Committee, stated, “I don’t think we’re well prepared at all. And I don’t think the public is aware of what’s coming.” The consequences of such a lack of preparedness can already be seen across the globe.

In Gabon, in Central Africa, the President had been rumored to be in bad health, and many were beginning to doubt his ability to continue leading the country. To quell the rumors, the government released a video of the president, which many soon came to believe was a deepfake. Shortly after, the military tried to overthrow the president, citing the suspected fake video as evidence of a need for change. In Malaysia, a deepfake video was spread that showed a cabinet minister confessing to an allegation of a sexual nature. Additionally, here in the United States, a video circulated that portrayed House Speaker Nancy Pelosi with slurred, impaired speech. It is not hard to imagine the spread of a deepfake video in which Donald Trump announces that he is declaring war on China or Mexico. Such is the real danger of deepfake videos.

Several legal considerations ought to inform the proper response. Notably, deepfakes implicate the First Amendment of the United States Constitution, given that a flat-out ban on all deepfakes may be resisted by those who would argue that such a ban is contrary to freedom of expression. In United States v. Alvarez, 132 S. Ct. 2537 (2012), the Supreme Court reasoned that “falsity alone” is not enough to strip speech of the First Amendment’s protections. Still, one could argue that fake videos undermine free speech because they distort expression in a way that denies the general public access to truthful information.

Additionally, legal remedies are available to those smeared by the spread of fake videos: defamation and other tort claims provide some recourse. Some states have criminalized impersonation, and certain privacy laws may offer some protection. Nevertheless, more action is needed to protect the general public from harmful false information. Congress ought to provide greater funding for research into ways of spotting deepfakes and of removing such videos from circulation. The solution is far from clear, but it is clear that more steps ought to be taken to prevent the spread of dangerous false information. Inaction only serves to empower the evil twin lurking in the depths of the internet.

Student Bio: Dario Hernandez is a staff member of the Journal of High Technology Law. He is currently a 2L pursuing his JD/MBA at Suffolk University Law School and holds a B.A. in Sociology and Theology from Boston College.

Disclaimer: The views expressed in this blog are the views of the author alone and do not represent the views of JHTL or Suffolk University Law School.